Posted in Uncategorized

With virtualization showing up everywhere and “Cloud this” and “Cloud that” referenced daily, I thought I would explore a concept we have been thinking about at Superna: virtual networks. I have an interesting job, as I get to tinker with technology and see whether it has commercial value before we bring new products and solutions to market. I decided to test the feasibility of building a virtual network out of virtual nodes (routers, switches, optical devices) that operate exactly like the real devices, while leveraging Cloud computing. The key question is “Who needs this solution?” I see many reasons this solution has value. Here are a few examples, and I’m sure there are more:

1. Customers could mock up a network and test what-if scenarios before making a buying decision on new equipment, to see whether network convergence (OSPF, BGP, optical) or latency meets their business requirements.
2. Network management vendors often struggle with the cost of buying real devices to integrate into their management platforms. Simulated nodes that expose the same SNMP, TL1, or CLI interface as the real device are a much cheaper alternative. This dramatically reduces cost and allows large, complex networks to be built to stress test management applications without the expense of hardware.
3. Learning how to provision and operate network equipment normally requires an expensive lab environment, but with virtual networks and management software, the lab itself can be virtualized in the Cloud.
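To make the idea of a simulated node concrete, here is a minimal sketch of a toy TL1 responder. It loosely follows the TL1 command shape (VERB-MODIFIER:TID:AID:CTAG;) and the COMPLD/DENY response shape, but it is not Superna’s simulator: the class name, the node ID, and the alarm payload strings are hypothetical and invented for illustration.

```python
from datetime import datetime
from typing import Optional

# Illustrative alarm payloads for one simulated optical node (invented, not real device output).
CANNED_ALARMS = [
    '"FAC-1-1,OC48:CR,LOS,SA,NEND,RCV"',
    '"FAC-1-2,OC48:MN,LOF,NSA,NEND,RCV"',
]


class SimulatedTl1Node:
    """Toy TL1 responder: parses VERB-MOD:TID:AID:CTAG; and returns a canned reply."""

    def __init__(self, sid: str):
        self.sid = sid  # system identifier echoed in every response header

    def handle(self, command: str, now: Optional[datetime] = None) -> str:
        if now is None:
            now = datetime.now()
        fields = command.strip().rstrip(";").split(":")
        verb = fields[0].upper()
        # The correlation tag (CTAG) lets the manager match replies to requests.
        ctag = fields[3] if len(fields) > 3 and fields[3] else "0"
        header = f"   {self.sid} {now:%y-%m-%d %H:%M:%S}"
        if verb == "RTRV-ALM-ALL":
            body = [f"M  {ctag} COMPLD"] + [f"   {a}" for a in CANNED_ALARMS]
        else:
            # Commands the simulator does not model are denied rather than faked.
            body = [f"M  {ctag} DENY", "   /* command not simulated */"]
        return "\r\n".join([header, *body, ";"])


if __name__ == "__main__":
    node = SimulatedTl1Node("NODE01")
    print(node.handle("RTRV-ALM-ALL:NODE01:ALL:42;"))
```

In a real simulator this handler would sit behind a TCP listener so that management software connects to it exactly as it would to hardware; answering unmodeled commands with DENY keeps the simulator honest about what it supports.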

The test I set up validated a virtual optical network with simulators running in Amazon’s Elastic Compute Cloud (EC2). I wanted to see whether our management software could interact with virtual nodes hosted in a Cloud offering, hundreds of milliseconds away over the Internet. The test used 30 virtual optical simulators to represent a 30-node network.

The final results show that it’s feasible: everything worked as expected, with the Amazon-hosted simulators sending 25 alarms a second to our management software over a 400 millisecond connection. Entire networks with hundreds of nodes can be turned on with the click of a button and turned off when not needed, saving power and space compared with traditional hardware-based testing.
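As a back-of-the-envelope check on these numbers, Little’s law (L = λW) gives the average number of alarms in transit between the Amazon-hosted simulators and the management software. This sketch assumes that the 400 ms figure is the delivery delay for each alarm and that alarms arrive at a steady rate; the post does not state either.

```python
NODES = 30          # simulators in the test network
ALARM_RATE = 25.0   # alarms per second, aggregate across all simulators
DELAY_S = 0.4       # 400 ms, assumed here to be the delivery delay for each alarm


def per_node_rate(total_rate: float, nodes: int) -> float:
    """Average alarm rate each simulator must generate."""
    return total_rate / nodes


def in_flight(rate: float, delay_s: float) -> float:
    """Little's law, L = lambda * W: average number of alarms in transit at any moment."""
    return rate * delay_s


if __name__ == "__main__":
    print(f"per node:  {per_node_rate(ALARM_RATE, NODES):.2f} alarms/s")
    print(f"in flight: {in_flight(ALARM_RATE, DELAY_S):.1f} alarms")
    print(f"per hour:  {ALARM_RATE * 3600:,.0f} alarms")
```

Under these assumptions each simulator produces about 0.83 alarms per second, roughly ten alarms are in transit at any moment, and an hour of testing generates about 90,000 alarms.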

Stay tuned for updates on this project.

Posted in Cloud Computing, Network Management, simulators, Uncategorized, virtual networks, Virtualization | Tagged cloud computing, emc, EMC Ionix, network management, network management system, network outage, optical networking, virtualization

With Cloud computing topping every CIO’s list of priorities, I have started to wonder which applications will actually get deployed in the Cloud. I suspect the applications that “run the business” will be the last to find their way into the Cloud, because security, performance, and support are so critical for them. I don’t often see management software as a candidate to move outside the datacenter. Service providers do offer managed services, but outsourcing network management to a service provider is very expensive. I see potential for hosted management software that executes remotely while monitoring and managing a customer’s network: a lower-cost approach that lets a customer quickly enable management of a network and share a view with anyone who needs access. Managing, patching, or adding new device support to this type of software is typically time-consuming, and expanding its features requires a lot of planning. Virtual management software in the Cloud may be the answer.

Posted in Business Continuity, Cloud Computing, EMC Ionix, Network Management | Tagged cloud computing, EMC Ionix, hosted software, network management, NMS, optical networking

Continuing the discussion of spatially enabled network data, there are good reasons for taking the additional step of distributing this data to the telecom mobile field force, sending it right to their laptops and cell phones while they are resolving an outage or doing routine maintenance. There are at least two:

1. Efficiency. All field tasks get done faster and more accurately once workers can see the precise location of network equipment, anticipate job requirements, search for the nearest spares, and contact co-workers with detailed information. This cuts down on the need to first inspect the site and then drive back to the shop for tools and equipment.

2. Buy-In. As the field force starts actively using spatial data and recognizing its value, they naturally become motivated to maintain that data with corrections from the field. Today’s applications have good tools for ‘redlining’ or updating data from a mobile device. Once the field force buys in to the process of updating network data as part of their work, the corporate data becomes more accurate, reflecting the ‘as built’ network.
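The “search for the nearest spares” step above can be sketched with a great-circle (haversine) distance over depot locations. This is a minimal illustration, not a feature of any product mentioned here; the depot names, coordinates, and part numbers are hypothetical, and straight-line distance is only a proxy for actual drive time.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass
class Depot:
    name: str
    lat: float
    lon: float
    spares: dict  # part number -> quantity on hand


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two latitude/longitude points, in kilometres."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_spare(part, lat, lon, depots):
    """Return (depot, distance_km) for the closest depot stocking `part`, or None."""
    candidates = [
        (d, haversine_km(lat, lon, d.lat, d.lon))
        for d in depots
        if d.spares.get(part, 0) > 0  # skip depots that are out of stock
    ]
    return min(candidates, key=lambda c: c[1], default=None)


if __name__ == "__main__":
    depots = [
        Depot("Ottawa", 45.42, -75.70, {"SFP-10G": 0}),
        Depot("Kanata", 45.30, -75.91, {"SFP-10G": 2}),
        Depot("Montreal", 45.50, -73.57, {"SFP-10G": 5}),
    ]
    print(nearest_spare("SFP-10G", 45.42, -75.70, depots))
```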

Benefits return to the NOC manager and VP of network operations in the form of more timely and accurate reports on the network, to better support management decisions.

Network and spatial data have been converging for some time, but with increasing scope. As Sherlock Holmes would say, “the game is afoot” now, with deals such as the recent partnership of Nokia and Yahoo (http://news.yahoo.com/s/ap/20100524/ap_on_hi_te/us_tec_yahoo_nokia).

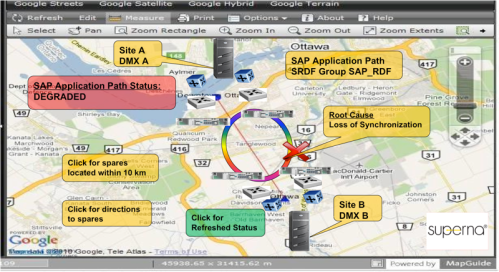

At Superna, we are developing plug-ins that spatially reference EMC Ionix network data. Here is a one-minute video that demonstrates how our Network Discovery Engine can spatially enable the alarm notification system of EMC Ionix and report results to a mobile device.
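As a rough illustration of what “spatially enabling” alarm notifications involves, the sketch below joins alarms to device coordinates from an inventory and emits GeoJSON, a common format that mobile map clients can display. It does not use the EMC Ionix or Network Discovery Engine APIs; the inventory, device names, and alarm fields are hypothetical. Note that GeoJSON (RFC 7946) orders coordinates as longitude, latitude.

```python
import json

# Hypothetical inventory: device name -> (longitude, latitude), in GeoJSON order.
INVENTORY = {
    "OTW-ROADM-01": (-75.6972, 45.4215),
    "KAN-ROADM-02": (-75.9110, 45.3088),
}


def alarms_to_geojson(alarms, inventory):
    """Attach coordinates to alarms and build a GeoJSON FeatureCollection.

    Alarms for devices with no known location are returned separately so they
    are not silently dropped from the operator's view.
    """
    features, unlocated = [], []
    for alarm in alarms:
        coords = inventory.get(alarm["device"])
        if coords is None:
            unlocated.append(alarm)
            continue
        features.append({
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": list(coords)},
            "properties": alarm,
        })
    return {"type": "FeatureCollection", "features": features}, unlocated


if __name__ == "__main__":
    alarms = [{"device": "OTW-ROADM-01", "severity": "critical", "condition": "LOS"}]
    collection, missing = alarms_to_geojson(alarms, INVENTORY)
    print(json.dumps(collection, indent=2))
```

Returning the unlocated alarms separately is a deliberate choice: gaps in the spatial inventory then show up as a work list instead of disappearing from the map.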

Posted in EMC Ionix, Mobile Data Application, Network Management, Spatial Data for Network Management | Tagged accessanywhere, business continuity, disaster recovery, EMC Ionix, network management, NMS, optical networking, root cause, spatial data

Server virtualization has changed the way IT operates and is now well established. The promise that virtualization can deliver low-cost CPU and storage in the Cloud, provisioned on demand in seconds for any IT department, seems too good to be true. I just returned from EMC World 2010, where I witnessed the “everything in the Cloud” pitch built on virtualization technologies.

Aside from the aging Counting Crows concert put on by EMC, the most interesting announcement from the show was the VPLEX product line and its “Access Data Anywhere” technology. It promises to make application data that typically resides in the SAN (storage area network), and for years has been orphaned inside a Fibre Channel island, accessible to any application regardless of physical location.

It’s about time Fibre Channel data became easier to network, but a real challenge emerges: terabytes of information that never used to leave the SAN now flow over network links with no predictability as to when or where they are going. Today’s management applications are poorly equipped to handle network and application workloads that are free to run anywhere in the network. I see the old adage “location, location, location” coming into play in such a dynamic IT environment. Management software will need to know where data is flowing, where it’s coming from, where it’s going, and over which network links. This will need to happen in real time, because the network has become too complex for humans to make sense of the data without some level of automation. Management software needs to make decisions, raise alarms, and show trends based not only on devices but also on location.
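One way to picture “where data is flowing, where it’s coming from, where it’s going, and over which network links” is to aggregate flow records per link while remembering which site pairs are responsible. This is a minimal sketch under stated assumptions: the record fields, link IDs, and threshold are hypothetical, and in practice the records might come from a flow export such as NetFlow or IPFIX joined with topology data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowRecord:
    src_site: str
    dst_site: str
    path: tuple       # ordered link IDs the flow traverses (hypothetical naming)
    bytes_sent: int


def link_load(flows):
    """Sum bytes per link and remember which site pairs are using each link."""
    load = defaultdict(int)
    users = defaultdict(set)
    for f in flows:
        for link in f.path:
            load[link] += f.bytes_sent
            users[link].add((f.src_site, f.dst_site))
    return dict(load), {k: sorted(v) for k, v in users.items()}


def hot_links(flows, threshold_bytes):
    """Links whose total traffic exceeds the threshold, with the locations driving it."""
    load, users = link_load(flows)
    return {link: (b, users[link]) for link, b in load.items() if b > threshold_bytes}


if __name__ == "__main__":
    flows = [
        FlowRecord("Boston", "London", ("BOS-NYC", "NYC-LON"), 800),
        FlowRecord("Chicago", "London", ("CHI-NYC", "NYC-LON"), 500),
    ]
    print(hot_links(flows, 1000))
```

An alarm raised this way says not only “link NYC-LON is hot” but also which locations are sending data over it, which is the location-aware view the paragraph argues for.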

Consider the example of “follow the moon” computing, where workloads in North America migrate to Europe at the end of the workday to take advantage of lower power costs, available CPU capacity, and lower latency to end users, before moving on to Asia. Terabytes of information start moving, and applications never stop running: some are executing in North America while others are in flight to Europe or Asia. If something fails, where is the latest copy of the data? Where was the application last running? Where were the application and its data headed? Here again, location will be very important for answering these questions and for delivering on Cloud computing’s promise of lower TCO. Geospatially enabled management systems look like a promising technology breakthrough.
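The three questions above become simple lookups if management software keeps a time-stamped log of migrations and replica synchronizations. The sketch below assumes such logs exist; the event schema, site names, and timestamps are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MigrationEvent:
    t: float                    # seconds on some shared clock (hypothetical)
    app: str
    state: str                  # "running" or "migrating"
    site: str                   # where it runs, or the source site while migrating
    dest: Optional[str] = None  # destination site while migrating


def last_known(events, app, failure_time):
    """Latest event for `app` at or before the failure: where it ran and where it was headed."""
    history = [e for e in events if e.app == app and e.t <= failure_time]
    return max(history, key=lambda e: e.t, default=None)


def latest_copy(replica_syncs, failure_time):
    """replica_syncs: (site, t) pairs recording when each site's copy was last synchronized."""
    valid = [(site, t) for site, t in replica_syncs if t <= failure_time]
    return max(valid, key=lambda s: s[1], default=None)


if __name__ == "__main__":
    events = [
        MigrationEvent(0, "erp", "running", "Toronto"),
        MigrationEvent(100, "erp", "migrating", "Toronto", "Frankfurt"),
        MigrationEvent(400, "erp", "running", "Frankfurt"),
    ]
    print(last_known(events, "erp", failure_time=250))
    print(latest_copy([("Toronto", 90), ("Frankfurt", 240)], failure_time=250))
```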

Posted in Cloud Computing, Network Management, Spatial Data for Network Management, Virtualization | Tagged accessanywhere, cloud computing, emc, EMC Ionix, emc world, network management, spatial data, virtualization, vplex

Disaster recovery is more complex today than ever before. The introduction of virtualization into every layer of the OSI stack means the number of troubleshooting steps and devices in the data path has expanded tenfold. Clearly, efficient network management demands a new approach.

This point was made recently in the NetQoS blog, Network Performance Daily:

> The main concern that the lack of visibility presents to enterprise IT shops is the idea that mission critical applications that performed fine before virtualization may perform poorly when virtualized, and the IT shop will have no way of being proactive in finding performance problems, nor will they have the tools they need to quickly find the root cause of the problem.

Mission-critical applications are generally replicated between data centers using a protocol such as EMC’s SRDF. The WAN is typically WDM or SONET/SDH, with a more recent shift toward IP networks. I have seen customer networks where business-impacting issues took months to root-cause and resolve. One constant I have observed is that large, complex applications and networks need accurate inventory and topology to narrow down the search for a root cause. The EMC Ionix product targets cross-domain fault correlation and topology, but it has gaps in device support. Targeted solutions for end-to-end path management still don’t exist.
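To show how accurate topology narrows the search, the sketch below finds the data path between two storage arrays and keeps only the alarmed devices that lie on it. It is an illustration, not how EMC Ionix correlates faults: breadth-first shortest path stands in for the provisioned circuit record a real inventory would hold, and the device names are hypothetical.

```python
from collections import deque


def shortest_path(topology, src, dst):
    """Breadth-first search over an undirected adjacency map; returns a node list or None."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:  # walk back to the source
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nbr in topology.get(node, ()):
            if nbr not in prev:
                prev[nbr] = node
                queue.append(nbr)
    return None


def suspects_on_path(topology, src, dst, alarmed):
    """Alarmed devices that lie on the data path between src and dst, in path order."""
    path = shortest_path(topology, src, dst) or []
    return [n for n in path if n in alarmed]


if __name__ == "__main__":
    topo = {
        "array-A": ["fcsw-1"], "fcsw-1": ["array-A", "dwdm-1"],
        "dwdm-1": ["fcsw-1", "dwdm-2", "dwdm-3"], "dwdm-2": ["dwdm-1", "fcsw-2"],
        "dwdm-3": ["dwdm-1"], "fcsw-2": ["dwdm-2", "array-B"], "array-B": ["fcsw-2"],
    }
    print(suspects_on_path(topo, "array-A", "array-B", {"dwdm-2", "dwdm-3", "router-9"}))
```

Of three active alarms, only one sits on the replication path, which is exactly the narrowing that good inventory and topology data make possible.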

The industry has to move beyond the protection of devices on the network. Monitoring and securing the entire data path is necessary to achieve the next level of business continuity protection.

Posted in Disaster Recovery, Virtualization | Tagged business continuity, disaster recovery, EMC Ionix, network management, optical networking

How can you manage a network without access to spatial data? Badly!

Electrical utilities have known this for a long time, but too many telecom network managers rely on logical diagrams and online spreadsheets. As a result, they are unable to visualize the ramifications of physical threats to the network, such as floods, fires, or traffic accidents. Nor can they fully determine the root cause of network outages that could be geographically correlated. It takes them much longer to determine which equipment is affected at a location and to identify the nearest source of spares. When your outside plant infrastructure is visible on a street map or aerial photo, such relationships are easy to spot.
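Geographic correlation can start very simply: group alarms whose locations are within some radius of each other, so that a cluster of alarms around one street corner stands out as a single physical event. The sketch below uses single-linkage grouping with a union-find structure; this is one convenient technique among many, the alarm IDs and coordinates are hypothetical, and a production system would also consider time windows and topology.

```python
import math


def haversine_km(a, b):
    """Great-circle distance in km between (lat, lon) pairs a and b."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def correlate(alarms, radius_km):
    """Group alarms so each member is within radius_km of at least one other member
    of its group (single linkage, via union-find). Largest groups come first."""
    parent = list(range(len(alarms)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    for i in range(len(alarms)):
        for j in range(i + 1, len(alarms)):
            if haversine_km(alarms[i]["loc"], alarms[j]["loc"]) <= radius_km:
                parent[find(i)] = find(j)

    groups = {}
    for i, alarm in enumerate(alarms):
        groups.setdefault(find(i), []).append(alarm["id"])
    return sorted(groups.values(), key=len, reverse=True)


if __name__ == "__main__":
    alarms = [
        {"id": "A1", "loc": (45.4215, -75.6972)},
        {"id": "A2", "loc": (45.4250, -75.6900)},
        {"id": "B1", "loc": (43.6532, -79.3832)},
    ]
    print(correlate(alarms, radius_km=2.0))
```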

Adding the spatial dimension brings many benefits beyond root-cause analysis. In particular, it helps managers to work proactively, preventing problems and saving costs. For example, if you know the river is rising, your 3D spatial map identifies the affected area for a range of flood scenarios, and shows which equipment to protect or shut down before the waters are lapping at the door. As a result, you can dispatch crews to work more safely in dry conditions. If several alarms pop up in your management system, immediate access to a map of their location might show you the cause and identify other equipment at risk. You can also use network trace algorithms to identify upstream and downstream effects of local issues.
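The flood scenario and the network trace described above can be combined in a short sketch: first select equipment whose ground elevation is at or below a predicted water level, then trace everything fed by that equipment. The elevation threshold is a deliberately crude stand-in for a real flood model, and the equipment names, elevations, and feed relationships are hypothetical (elevations could, for example, be sampled from SRTM data).

```python
from collections import deque

# Hypothetical equipment elevations in metres.
ELEVATION_M = {"hut-river": 61.0, "cabinet-12": 64.5, "co-main": 80.0, "olt-east": 72.0}

# Hypothetical feed relationships: upstream device -> devices it feeds.
FEEDS = {
    "co-main": ["hut-river", "olt-east"],
    "hut-river": ["cabinet-12", "cust-acme"],
    "cabinet-12": ["cust-bolt"],
    "olt-east": ["cust-corp"],
}


def flooded(elevations, water_level_m):
    """Equipment at or below the predicted water level (ignores berms, raised floors, etc.)."""
    return {name for name, z in elevations.items() if z <= water_level_m}


def downstream(feeds, start):
    """Everything fed, directly or indirectly, by the devices in `start` (BFS trace),
    excluding the starting devices themselves."""
    seen, queue = set(), deque(start)
    while queue:
        for child in feeds.get(queue.popleft(), ()):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen - set(start)


if __name__ == "__main__":
    for level in (62.0, 65.0):  # a range of flood scenarios
        at_risk = flooded(ELEVATION_M, level)
        print(level, sorted(at_risk), sorted(downstream(FEEDS, at_risk)))
```

Running the trace for each scenario gives a list of equipment to protect or shut down and the customers who would be affected, before any alarms arrive.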

This figure shows an example of how a network event could be displayed with spatial context and additional planning data.

Fortunately, with sources such as Google Earth and the Shuttle Radar Topography Mission (SRTM), network managers now have easy access to spatial data for everywhere on Earth. They also have Web-based applications such as MapGuide (mapguide.osgeo.org) that are easy to use and extend for a wide range of map-handling purposes.

Network planners have long used spatial technologies for wireless applications, and we are now seeing them expand to other network systems. Returning to network management, with today’s technology spatial data can be pushed from head office to mobile devices in the hands of maintenance staff on location. They can use the data to work more efficiently, and with a few simple editing tools they can add notes, photos, and corrections to the maps.
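A field correction (“redline”) can be modeled as a small record that carries a note, a photo reference, or a corrected location back to the master data. The sketch below assumes the redline has already been reviewed at head office; the schema, asset ID, and file name are hypothetical and not taken from any particular mobile GIS product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional, Tuple


@dataclass
class Redline:
    """One field edit captured on a mobile device (hypothetical schema)."""
    asset_id: str
    author: str
    note: str = ""
    corrected_location: Optional[Tuple[float, float]] = None  # (lat, lon)
    photo_ref: Optional[str] = None
    created: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def apply_redline(assets: dict, redline: Redline) -> dict:
    """Merge a reviewed redline into the master asset record, keeping an audit trail."""
    asset = assets[redline.asset_id]
    if redline.corrected_location is not None:
        asset["location"] = redline.corrected_location
    if redline.note:
        asset.setdefault("notes", []).append(f"{redline.author}: {redline.note}")
    if redline.photo_ref:
        asset.setdefault("photos", []).append(redline.photo_ref)
    asset.setdefault("history", []).append(redline)  # who changed what, and when
    return asset


if __name__ == "__main__":
    assets = {"SPLICE-0042": {"location": (45.40, -75.70)}}
    edit = Redline("SPLICE-0042", "tech7", note="Enclosure is in the NE corner of the vault",
                   corrected_location=(45.4012, -75.7031))
    print(apply_redline(assets, edit)["location"])
```

Keeping every redline in the asset history lets the back office see how the “as built” record evolved and who supplied each correction.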

EMC Ionix has demonstrated a great capacity to integrate NMS data from heterogeneous systems. But the power of the suite could be vastly enhanced if it were spatially enabled, adding both geospatial data and algorithms for proximity analysis, network trace, and so on. Fortunately, such developments are underway. More about that later!

Posted in Network Management | Tagged EMC Ionix, network management, network management system, network outage, NMS, root cause, spatial data

Network security is moving into a more advanced state, and many organizations are exploring new strategies to satisfy emerging requirements, such as application end-to-end security. We also see traditional storage applications like EMC’s SRDF or specific line-of-business applications that require different levels of security on a shared network.

A key obstacle to wider adoption of Cloud infrastructure is the extent to which the Cloud can be secured. That security must run at high speed (wire rate) and conform to industry standards.

The responsiveness of the Cloud and the emergence of new elements such as the hypervisor are welcome, but they do not address the basic problem of transmitting data securely over an undefined, unsecured network. This is especially worrisome for enterprises that contract network services from a commercial service provider (SP). In addition to sharing the network with the SP’s other customers, they also share the physical storage media on which the SP hosts their virtual servers. The possibilities for threats and data contamination are multiplied and ever-changing in the Cloud.

One strategy that has worked well for us in today’s networks is a new model of encryption service. The SP maintains an encryption framework, while the enterprise customer retains control over the generation and distribution of security keys used in their data transmissions. This service model seems to meet the business interests of both parties.

The SP is most concerned with providing a reliable service to multiple customers, but does not want to get bogged down in the minutiae of key distribution. In fact, their network is probably more secure if the SP does not know the keys.

Meanwhile, the enterprise customer is free to determine the appropriate level of security for different data types, and knows what is happening with their keys at all times.
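The division of responsibilities in this service model can be sketched as two objects: a customer-side key manager that generates and holds key material according to its own per-data-type policy, and an SP-side framework that only tracks which key ID protects each circuit. This illustrates the separation of duties, not a cipher; actual wire-rate encryption would be performed by the encryption endpoints using a standard algorithm such as AES. The class names, policy values, and key-ID format are hypothetical, and Python’s `secrets` module is used only to generate random key bytes.

```python
import secrets
from dataclasses import dataclass, field

# Hypothetical customer policy: data type -> (key length in bits, rotation period in days).
POLICY = {
    "srdf-replication": (256, 30),
    "email": (128, 90),
}


@dataclass
class CustomerKeyManager:
    """Runs on the enterprise side; key material is only handed to the customer's
    own encryption endpoints, never to the SP."""
    policy: dict
    _keys: dict = field(default_factory=dict, repr=False)  # key_id -> key bytes

    def issue_key(self, data_type):
        bits, rotate_days = self.policy[data_type]
        key_id = f"{data_type}-{secrets.token_hex(4)}"
        self._keys[key_id] = secrets.token_bytes(bits // 8)
        return key_id, rotate_days

    def key_for_endpoint(self, key_id):
        return self._keys[key_id]


@dataclass
class ProviderFramework:
    """Runs on the SP side; it records which key ID protects each customer circuit
    but never stores or sees key material."""
    circuits: dict = field(default_factory=dict)  # circuit -> key_id

    def bind(self, circuit, key_id):
        self.circuits[circuit] = key_id


if __name__ == "__main__":
    customer = CustomerKeyManager(POLICY)
    sp = ProviderFramework()
    key_id, rotate_days = customer.issue_key("srdf-replication")
    sp.bind("circuit-7", key_id)
    print(sp.circuits, f"rotate every {rotate_days} days")
```

Because the SP only ever handles key IDs, it can operate and troubleshoot the encryption framework for many customers without being able to read any of their traffic.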

For more information, see our white paper “Securing the On Ramp to Hosted Services”.

Posted in Cloud Computing, Network Security | Tagged cloud computing, encryption, network security